Over recent months, IREN has drawn significant attention — not only from the retail community but also from Wall Street and the broader financial media landscape. While the spotlight is long overdue, few investors truly understand what IREN actually is or what constitutes its moat.

IREN is not just another AI cloud provider, nor is it “just” a Bitcoin miner. From day one, the company has been architected to become one of the most dominant hyperscalers of the 21st century — one of the first true data center conglomerates.

IREN in a Nutshell

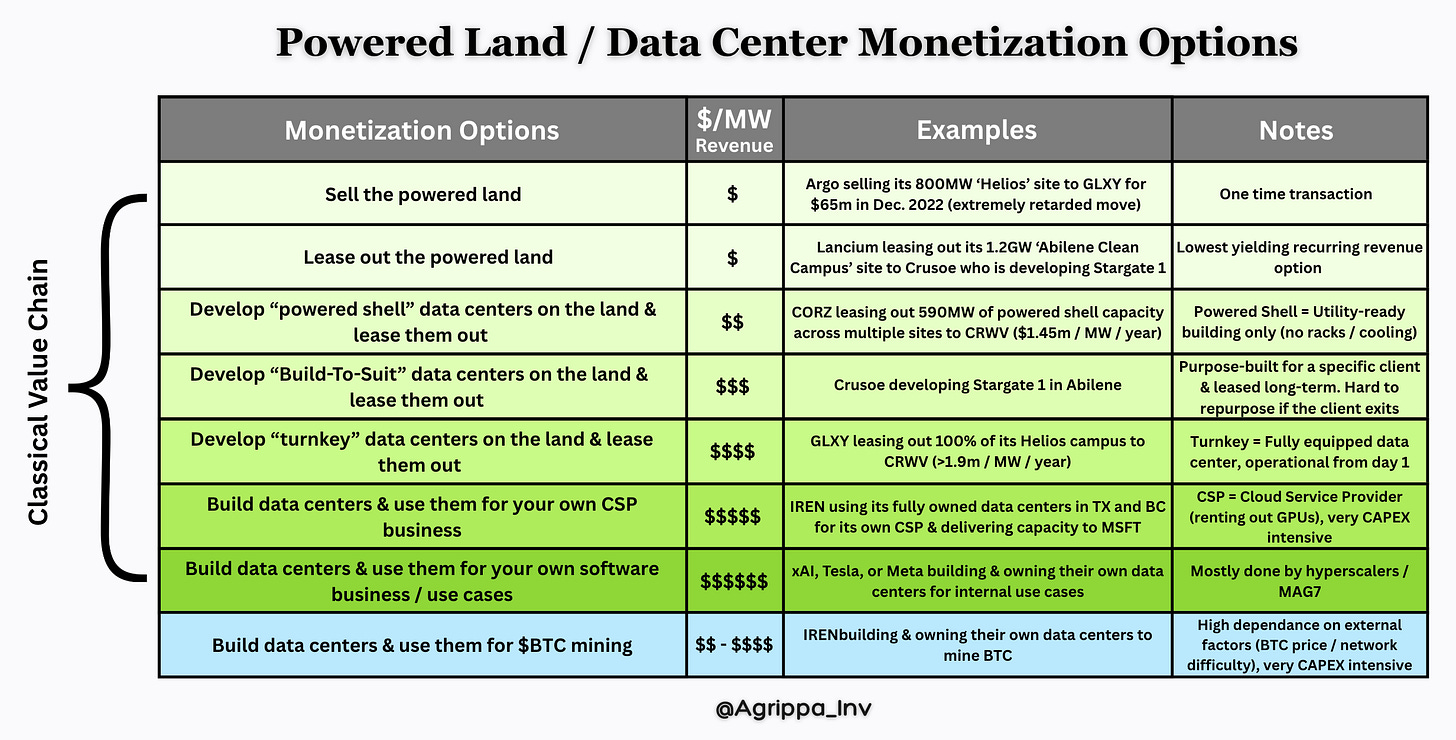

At its core, IREN is a data center company whose sole objective is to monetize grid-connected power in the most lucrative way possible, i.e., to capture the highest dollar value per megawatt ($/MW).

What truly sets IREN apart is that it owns the entire physical infrastructure stack: the land and interconnects, the high-voltage gear like transformers and substations, the data centers themselves, and the compute hardware inside them.

This level of vertical integration opens up virtually every power-monetization pathway across the sector’s value chain:

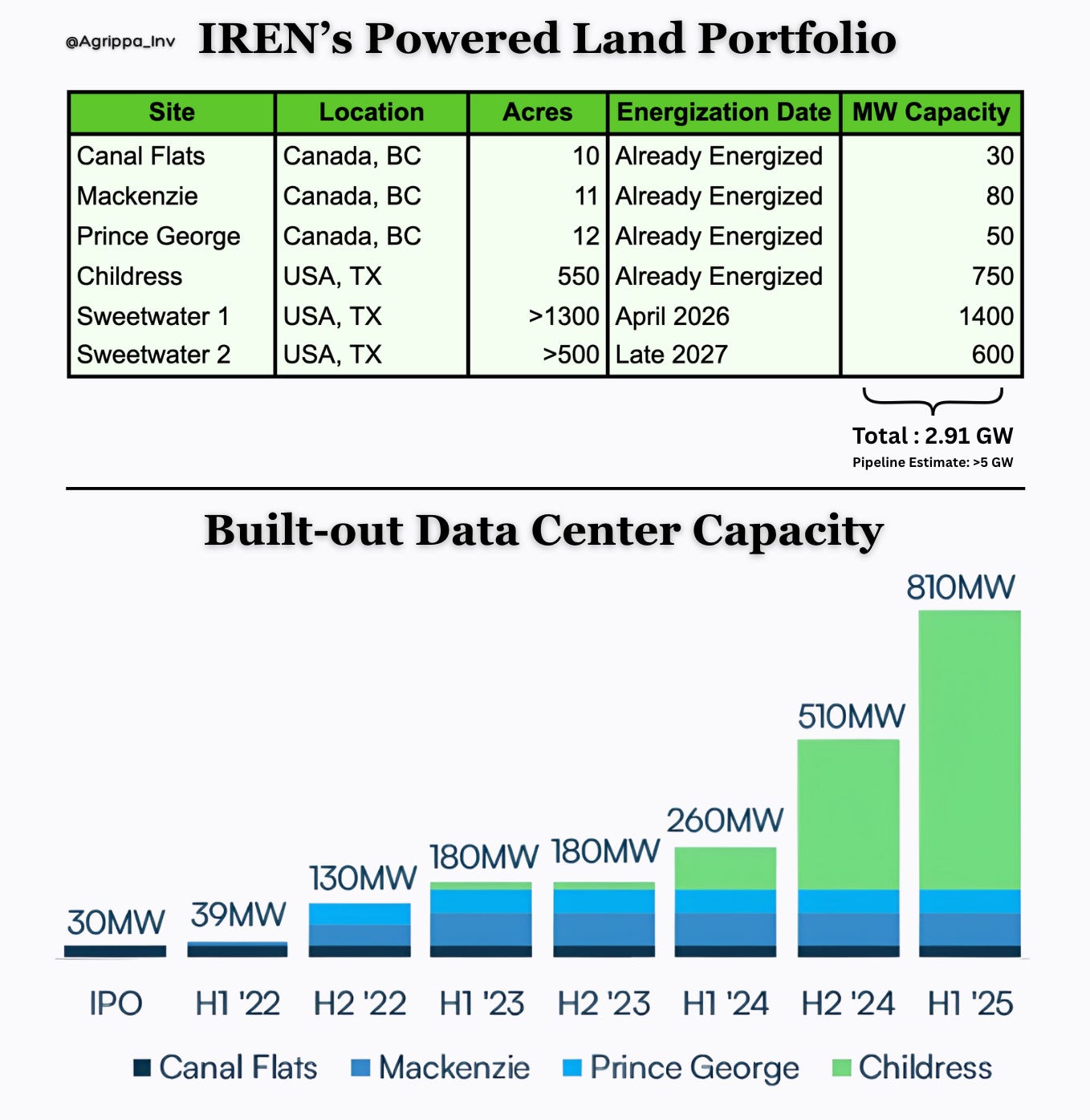

With a massive portfolio of 2.91 GW of secured, grid-connected power, IREN holds all the cards in this power-constrained market. Naturally, management is prioritizing one of the highest-yielding monetization pathways, AI cloud (CSP), and the recent $9.7 billion, five-year Microsoft contract marks a pivotal moment in the company’s history.

IREN’s Bitcoin Mining Segment

While most analysts and investors now associate IREN with its rapid emergence in AI cloud, its ascent in Bitcoin mining is just as remarkable.

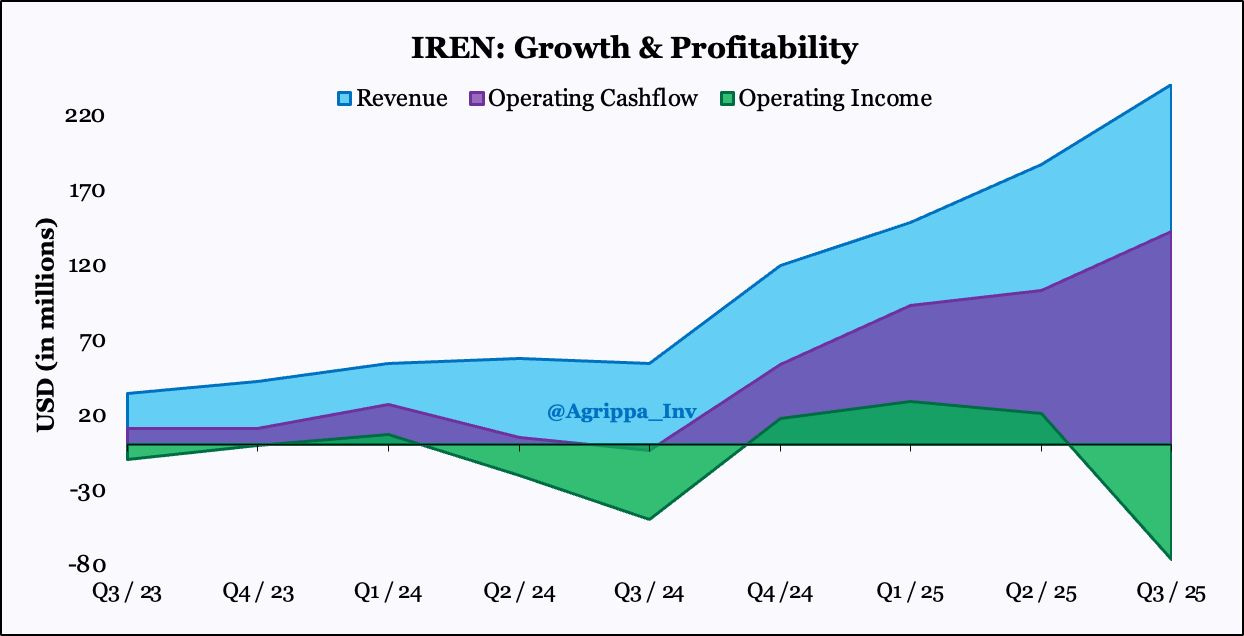

Over the past couple of years, IREN has established itself as the most profitable and fastest-growing “BTC miner” of all time.

It now ranks among the three largest publicly traded BTC miners, alongside MARA Holdings (MARA) and CleanSpark (CLSK), with 50 exahashes per second (EH/s) of hashrate.

However, a key differentiator is IREN’s vastly superior profitability and focus on cash flow. For one, IREN is the only “BTC miner” to have achieved multiple consecutive quarters of operating profitability during one of the toughest bull markets in history for the mining sector; no other miner has been operationally profitable during this cycle.¹

Note: The chart depicts quarterly data. The Q3 figures no longer accurately reflect the operating profitability of IREN’s Bitcoin mining segment because of the company’s strong shift toward AI cloud (CSP) in recent months. In Q3, SG&A rose materially due to stock-based compensation and stock-price-linked performance equity awards, a direct byproduct of IREN’s ~10x share-price increase over recent quarters. These charges depressed operating income but did not affect operating cash flow (OCF).

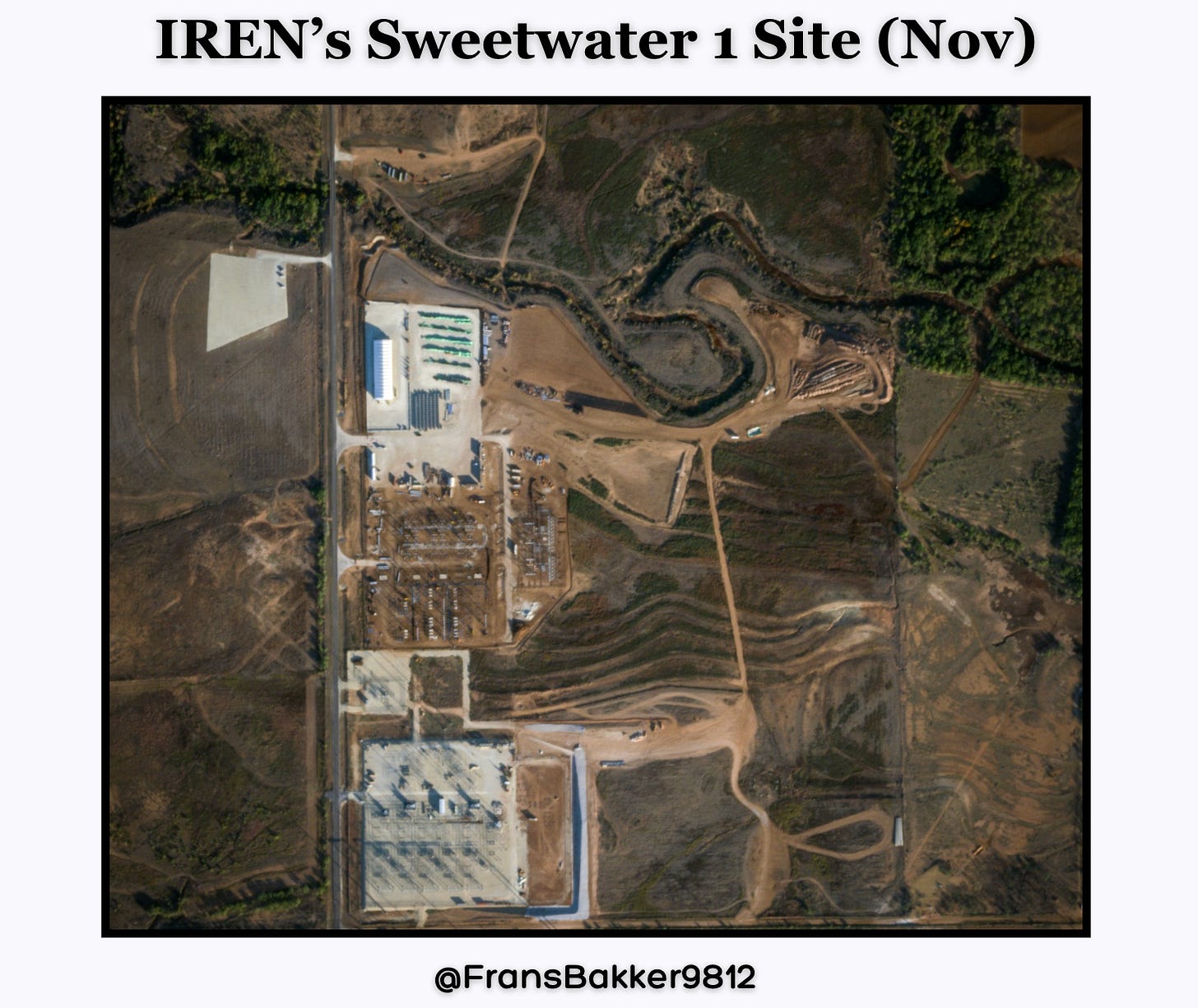

IREN’s superior margin profile stems from a combination of full vertical integration and its “giga-site” strategy, with most of its current operating capacity concentrated at its 750 MW Childress site.

Image by: Frans Bakker

Few companies in the global data center space can claim to operate individual sites at this scale. In fact, IREN is one of the few companies with multiple sites exceeding 500 MW of grid-connected power.

Rather than maintaining a fragmented footprint of smaller sites across various regions — each with its own staffing, security, and operational overhead — IREN has taken a hyper-concentrated approach. By clustering the bulk of its capacity at a small number of giga-sites, IREN reduces duplicated overhead costs like staffing, on-site maintenance, and support infrastructure. Everything from energy routing to fiber paths to network interconnects can be optimized within a single high-density campus. This strategic consolidation drives significantly lower OPEX per MW and enables IREN to achieve meaningful operating leverage as capacity scales — a major driver behind its expanding profit margins.

Importantly, this efficiency carries over to all of IREN’s verticals: not just BTC mining, but increasingly its AI-related segments as well, where margin structure and operational efficiency are just as critical to long-term competitiveness.

Another way IREN differentiates itself in the BTC mining sector is that it is one of the few miners that does not “HODL”, i.e., hold Bitcoin on its balance sheet. It sells 100% of its mined BTC and reinvests every penny into expanding its vast power portfolio, creating a virtuous hyper-growth flywheel.

While IREN started out as a “BTC miner”, management never saw it as just that. Since its IPO in 2021, the company has consistently positioned itself as a data center company; BTC mining was only ever step one.

The primary reason IREN chose to start with BTC mining is that it was the most straightforward path to monetizing its large powered land portfolio. Nearly every other monetization option requires an end customer, and in this industry, credibility and a strong track record of development execution are everything. BTC mining doesn’t have that hurdle: you mine for yourself (i.e., for your shareholders), no customers required.

However, because it always intended to move into client-based data center services, IREN selected and built its sites to be fully suitable for HPC (high-performance computing). For example, its massive sites in West Texas are strategically positioned on top of a major fiber backbone, enabling exceptionally low latency of just 6 ms to Dallas (the Infomart carrier hotel), one of the largest interconnection hubs in the U.S. By comparison, Galaxy Digital’s (GLXY) 800 MW Helios site, also in West Texas and 100% contracted to CoreWeave, achieves latencies of 10–15 ms to Dallas according to Galaxy’s own specifications: still good, but not as elite as IREN’s 6 ms.

In case you don’t know, low latencies — both externally to major network hubs like Dallas, and internally across GPU clusters — are critical for cloud services and AI workloads, where every millisecond can impact performance.

Now that IREN has reached 50 EH/s of BTC mining capacity, management has officially paused further expansion of this segment for the time being. While future growth in mining hasn’t been ruled out, the company currently sees far greater potential in the AI/HPC market, primarily due to the severe shortage of AI-ready data centers.

Having solidified itself as the most dominant and operationally efficient force in the BTC mining industry, IREN is now ready to fully commit to AI/HPC at an unprecedented scale.

If you want a fully in-depth look at IREN’s ascent in the BTC mining industry — including the hiccups and lessons management faced along the way (as well as peer comparisons) — check out this deep dive → How IREN Conquered the BTC Mining Industry.

IREN’s AI Segment

IREN officially launched its AI segment in late 2023 with the acquisition of a few hundred NVIDIA H100 GPUs — capacity that was contracted to the AI startup Poolside in early 2024.

Since then, IREN has scaled its cloud capacity considerably, with the company now holding a total fleet of 23,300 GPUs:

1.9k NVIDIA H100s & H200s

19.1k NVIDIA B200s & B300s

1.2k NVIDIA GB300s

1.1k AMD MI350Xs
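
As a quick sanity check, the four rounded line items above do add up to the stated 23,300 GPUs. The short Python sketch below uses the figures copied from the list (the dictionary labels are my own shorthand) and also shows each line item’s share of the current fleet; the B200/B300 line alone accounts for roughly four-fifths.

```python
# Rounded GPU counts from the fleet breakdown above
fleet = {
    "NVIDIA H100/H200": 1_900,
    "NVIDIA B200/B300": 19_100,
    "NVIDIA GB300": 1_200,
    "AMD MI350X": 1_100,
}

total_gpus = sum(fleet.values())

# Share of the fleet held by each line item, in percent
shares = {name: 100 * count / total_gpus for name, count in fleet.items()}

if __name__ == "__main__":
    print(f"Total fleet: {total_gpus:,} GPUs")
    for name, pct in shares.items():
        print(f"{name:<18} {pct:5.1f}%")
```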

Most of this fleet growth happened in recent months, and much of it can be attributed to the company’s new CFO, Anthony Lewis, who joined IREN in early July 2025 as Chief Capital Officer and was promoted soon after.

Lewis has an extensive financing background, spending 22 years at Macquarie Group — most recently as Co-Treasurer — where he was responsible for global funding, liquidity, and capital management.

The reason he has been so instrumental in recent months is simple: IREN’s AI cloud offering never lacked demand, but servicing that demand is extremely capital-intensive due to hardware costs. Single GPUs can run upwards of $40k, and super-chips such as the GB300 are around $75-80k per unit.

Lewis was brought in to engineer capital-efficient ways to scale IREN’s fleet without over-leveraging the balance sheet, which is exactly what he accomplished with the GPU-leasing model IREN now uses. GPU leasing means renting hardware from vendors over a fixed period (e.g., 24–36 months) instead of purchasing it outright. At the end of the term, IREN has a buyout option, typically set at either a nominal $1 (meaning IREN has effectively paid the full purchase price through its lease payments) or a predetermined percentage (e.g., 15–20%) of the initial price. The cost of this capital-light structure is the interest paid to the vendor, which currently sits in the high single digits (~9%).
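
To see what this kind of structure costs in practice, here is a minimal sketch of a standard level-payment lease with an end-of-term buyout, using the ~9% rate and a 36-month term from the ranges above. It assumes monthly payments in arrears and monthly compounding; the $40,000 price is a hypothetical input based on the “upwards of $40k” figure, and real vendor terms (fees, payment timing, deposits) will differ.

```python
def monthly_lease_payment(price: float, annual_rate: float, months: int,
                          residual_fraction: float = 0.0) -> float:
    """Level monthly payment that amortizes `price` down to an end-of-term buyout.

    Illustrative assumptions, not actual vendor terms: payments at the end of
    each month, monthly compounding at annual_rate / 12, and a buyout equal to
    residual_fraction * price paid at the end of the term.
    """
    r = annual_rate / 12
    discount = (1 + r) ** -months                 # present-value factor of the final month
    financed = price - residual_fraction * price * discount  # part not covered by the buyout
    if r == 0:
        return financed / months
    annuity_factor = (1 - discount) / r           # PV of 1 paid at the end of each month
    return financed / annuity_factor


def total_lease_cost(price: float, annual_rate: float, months: int,
                     residual_fraction: float = 0.0) -> float:
    """Undiscounted sum of all lease payments plus the buyout."""
    payment = monthly_lease_payment(price, annual_rate, months, residual_fraction)
    return payment * months + residual_fraction * price


if __name__ == "__main__":
    PRICE = 40_000  # hypothetical GPU price in USD
    for residual in (0.0, 0.15):  # ~$1 buyout vs. a 15% buyout
        pmt = monthly_lease_payment(PRICE, 0.09, 36, residual)
        total = total_lease_cost(PRICE, 0.09, 36, residual)
        print(f"buyout {residual:.0%}: ${pmt:,.0f}/month, ${total:,.0f} all-in")
```

With these inputs, the $1-buyout case works out to roughly $1,270 per month, or about $45,800 in total, so the financing premium is on the order of $5,800 per GPU. The 15% buyout lowers the monthly payment but raises the all-in cost, because more principal stays outstanding for longer.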

Fun fact: I’ve heard IREN is gaining a reputation in Australia for “sniping” top Macquarie talent, with much of the executive team having deep ties to the country’s largest investment bank.

Over the coming year, IREN’s cloud capacity will grow considerably, with management guiding to a fleet of 140k GPUs by the end of 2026.

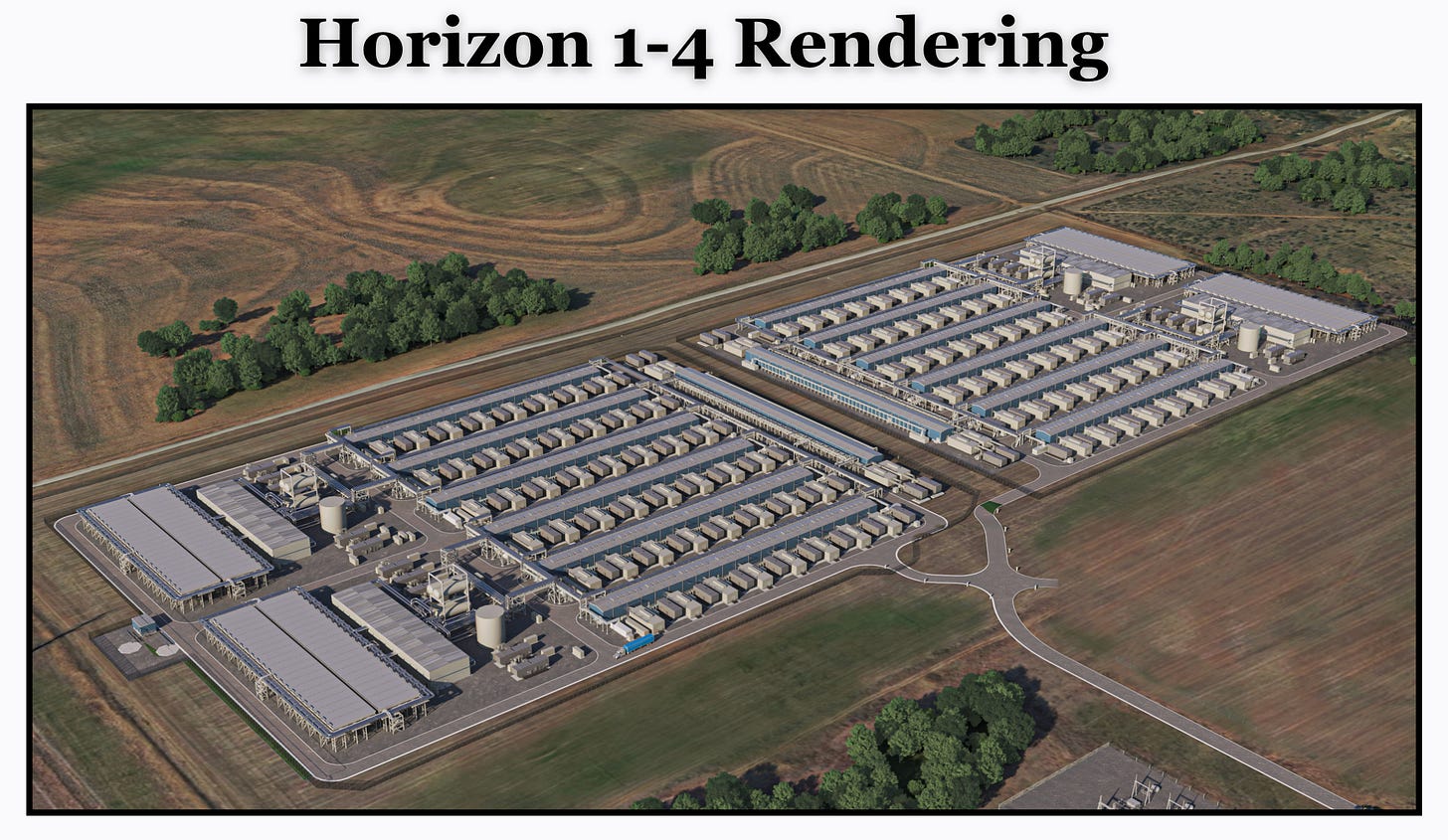

Much of that increase is tied to the company’s $9.7 billion deal with Microsoft at IREN’s Childress campus. This five-year cloud agreement encompasses 76,000 GB300 GPUs, to be installed across the newly built Horizon 1–4 facilities throughout 2026.
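
For a rough sense of scale, the headline numbers can be converted into an implied price per GPU-hour. This is a back-of-the-envelope sketch that assumes the $9.7 billion is spread evenly over the five years and across all 76,000 GPUs billed around the clock; it ignores the 2026 ramp-up, any prepayment, and the detailed contract terms.

```python
CONTRACT_VALUE_USD = 9.7e9   # headline contract value
TERM_YEARS = 5
GPU_COUNT = 76_000           # GB300 GPUs covered by the agreement
HOURS_PER_YEAR = 24 * 365    # ignores leap years

# Simplifying assumption: revenue is spread evenly over the term and across every GPU
annual_revenue = CONTRACT_VALUE_USD / TERM_YEARS
revenue_per_gpu_year = annual_revenue / GPU_COUNT
revenue_per_gpu_hour = revenue_per_gpu_year / HOURS_PER_YEAR

if __name__ == "__main__":
    print(f"~${annual_revenue / 1e9:.2f}B per year")
    print(f"~${revenue_per_gpu_year:,.0f} per GPU per year")
    print(f"~${revenue_per_gpu_hour:.2f} per GPU-hour")
```

Under those simplifying assumptions, the deal works out to roughly $1.94 billion per year, or a little under $3 per GPU-hour.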

For the full breakdown of this monumental partnership between IREN and Microsoft, check out my comprehensive analysis (available very soon) —> Unpacking the $9.7b IREN × MSFT Deal.

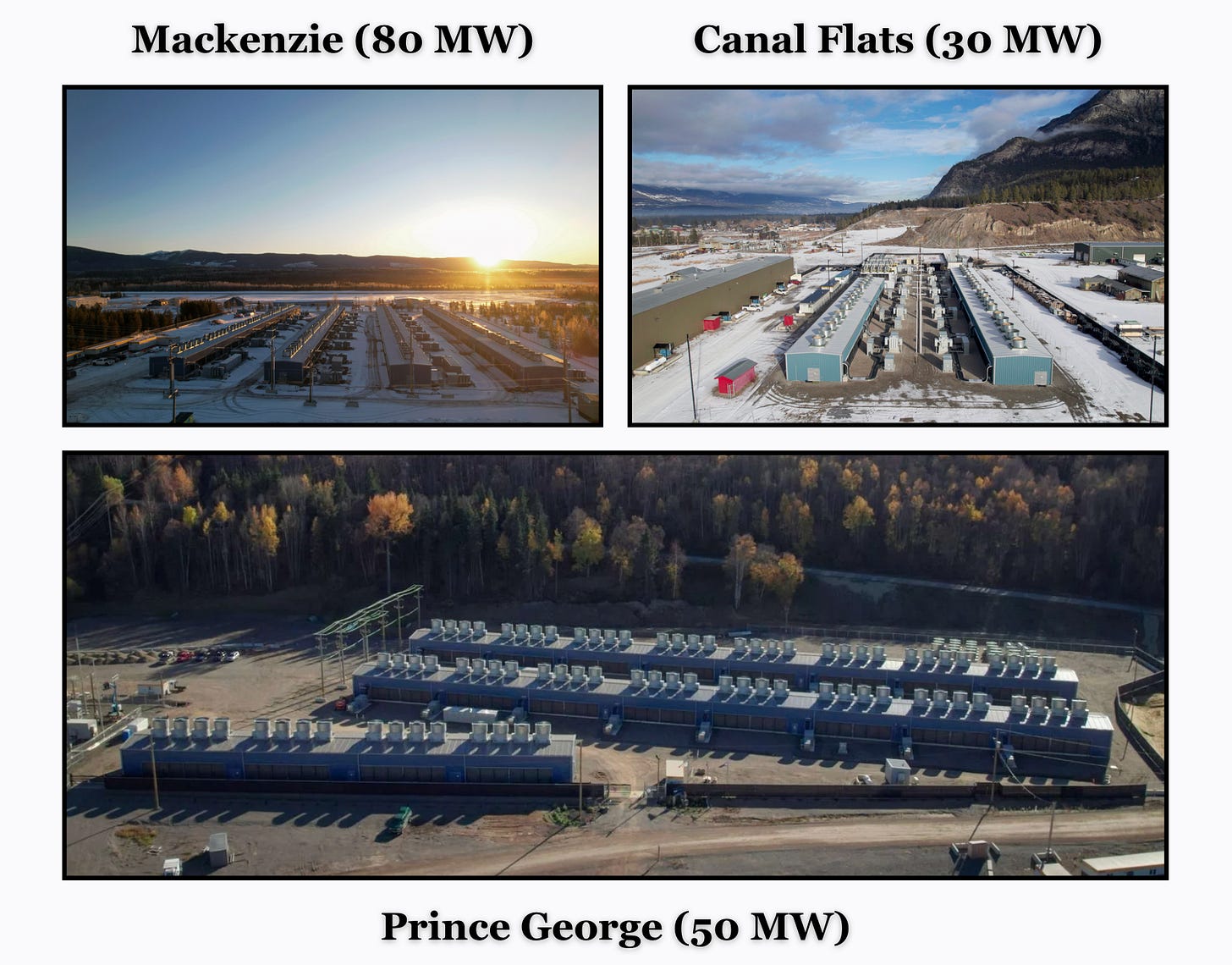

IREN also plans to acquire ~40k additional GPUs over the course of 2026 for its Canadian data centers. Management has guided that the vast majority of this capacity will consist of air-cooled B200/B300 models — an expansion few neo-clouds, or even hyperscalers, can pull off in North America for reasons I’ll detail later in this deep dive.

It’s apparent that IREN’s rapid ascent in the AI cloud market is nothing short of impressive — but do they truly have a durable edge, and how competitive is their offering?

In short: extremely competitive.

Running a GPU cloud service has strong parallels to BTC mining: both are highly capital-intensive and plagued by rapid hardware depreciation cycles. In BTC mining, ASICs typically need to be replaced every 3–5 years. In the CSP business, GPUs likely have a similarly limited profitable lifespan, primarily because each new generation renders the previous one less competitive. That means you’re constantly racing the clock to earn a return before the hardware becomes obsolete.

In the cloud space, there are two main paths to profitability:

Pricing Power — something currently reserved primarily for hyperscalers like AWS, Azure, Google Cloud, and Oracle

Cost Efficiency — achieved through low operating and energy costs

Pricing power primarily comes from two sources: reliability (measured in uptime) and value-added services, usually software solutions offered to clients. Cost efficiency, on the other hand, primarily comes from vertical integration of the physical infrastructure (think owning the data center and thus avoiding costly colocation fees) as well as from running tight operations. Access to cheap energy is also a factor, but not nearly as important as avoiding colocation rent.

The typical hyperscaler achieves profitability through immense pricing power, derived from a vast ecosystem of software solutions and add-ons. For them, cost efficiency is secondary, since they can often charge more than double the rates of the broader market. Their gross margins are naturally wide due to this pricing advantage, and these megacorps frequently lease white-label cloud capacity from reputable providers (think IREN × MSFT, or NBIS × MSFT) or rent data center capacity from colocation providers (think AWS x CIFR).

The rest of the market competes almost entirely on cost. While a few neo-cloud competitors are trying to differentiate with added software solutions, none has achieved meaningful pricing power so far.

So where does IREN fit in?

IREN is on its way to becoming the hyperscaler for hyperscalers.

IREN is all about scale; its biggest challenge is monetizing its vast power portfolio as quickly and as capital-efficiently as possible. Idle power earns nothing. There’s little point in catering to clients that need software solutions, as that segment usually consists of small to mid-sized enterprises that rarely need tens of megawatts, let alone hundreds. Moreover, the likes of Google, AWS, and Microsoft already dominate that space, so pushing software orchestration would pit IREN head-to-head with the world’s largest tech firms.

IREN is targeting the big fish — large-scale enterprise clients, and even other hyperscalers, that don’t need help on the software side. This target audience needs reliable compute for internal use, or they want to layer their own software on top of IREN’s capacity and resell that repackaged compute to smaller clients who do need ‘extra help’.

In other words, IREN offers bare-metal compute in bulk at very competitive prices. CCO Kent Draper and Co-CEO Dan Roberts articulated this market strategy very well on the recent Q3 earnings call:

Because IREN controls the entire physical infrastructure stack, its gross margins are among the highest in the industry, at roughly 90%. Of course, gross margins aren’t everything: the biggest expense for any cloud provider is depreciation on short-lived GPUs. But every provider faces that same constraint.

The point is: given heavy depreciation, you survive in this industry through either 1) pricing power or 2) cost efficiency. Since IREN avoids major expense items like colocation fees, which can cost a tenant roughly 20 percentage points of gross margin, it holds a distinct advantage in the long-term durability of its model.
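
The colocation argument can be illustrated with normalized numbers. In this hypothetical sketch, two operators earn the same revenue and carry the same direct costs and GPU depreciation; the only difference is that the tenant pays colocation rent equal to 20% of revenue. All inputs are assumptions chosen to mirror the ~90% vs. ~70% gross margins discussed in this article, and the sketch ignores the owner’s own depreciation on buildings and electrical gear, which partly offsets the advantage.

```python
def margin(revenue: float, *costs: float) -> float:
    """Margin as a fraction of revenue after subtracting the given costs."""
    return (revenue - sum(costs)) / revenue


# All figures are hypothetical and normalized to revenue = 100
REVENUE = 100.0
DIRECT_COSTS = 10.0       # power, staff, maintenance
COLOCATION_FEES = 20.0    # rent paid to a colocation provider (tenant only)
GPU_DEPRECIATION = 50.0   # same hardware cost for both operators

owner_gross = margin(REVENUE, DIRECT_COSTS)
tenant_gross = margin(REVENUE, DIRECT_COSTS, COLOCATION_FEES)
owner_after_dep = margin(REVENUE, DIRECT_COSTS, GPU_DEPRECIATION)
tenant_after_dep = margin(REVENUE, DIRECT_COSTS, COLOCATION_FEES, GPU_DEPRECIATION)

if __name__ == "__main__":
    print(f"Gross margin:           owner {owner_gross:.0%}, tenant {tenant_gross:.0%}")
    print(f"After GPU depreciation: owner {owner_after_dep:.0%}, tenant {tenant_after_dep:.0%}")
```

Both operators face the same depreciation, but the fixed colocation rent halves the tenant’s margin after depreciation in this example (20% vs. 40%), which is the durability point made above.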

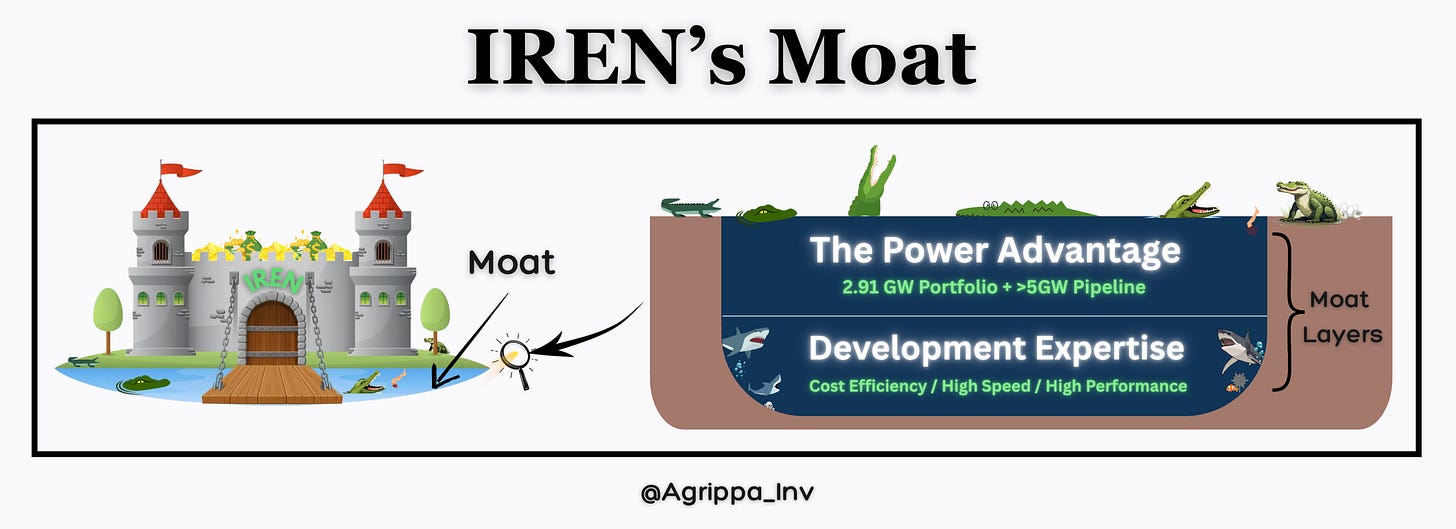

To fully assess IREN’s long-term potential, we now need to examine the company’s competitive moat — which is exactly what I’ll do in the following sections.

The Power Advantage

You may ask yourself why IREN’s grid-connected power portfolio is such a big deal. Can’t neo-clouds like CoreWeave or hyperscalers like Oracle just throw their weight & capital around to secure an equal amount of power?

In short: no.

You can’t just build a data center and expect to plug it into the grid. You need permits and approvals from local grid operators. The problem is that there’s a severe shortage of power, with electricity demand (primarily from data center growth) vastly outpacing current supply.

While new energy generation is ramping up, the demand curve is steepening even faster, resulting in a supply crunch that shows no signs of easing anytime soon.

Before the recent AI boom — which dramatically accelerated power demand — data center developers traditionally flocked to established markets like Northern Virginia, Silicon Valley, and Chicago. These regions already had mature infrastructure consisting of dense fiber networks, robust power grids, and proximity to enterprise demand.

But most of these regions are now completely tapped out when it comes to new power allocations for large-scale data center projects. This shortage is forcing developers to go wherever power is still available. That alone marks a major industry shift.

The region that’s emerged as an obvious choice is Texas, particularly West Texas, where there’s still some power “up for grabs”. That’s where Stargate’s (i.e., OpenAI’s) first 1.2 GW mega-site is being developed. At this point, permitting queues across the U.S. — including West Texas — have become extremely congested, and securing new grid-connected power now takes roughly 5-7 years.

Many developers openly claim to have gigawatts of power capacity, but when you read the fine print, that capacity often hinges on pending grid approvals.

In contrast, IREN’s management is more discreet about its power pipeline. It doesn’t publish estimated figures and only confirms that the pipeline is in the “multi-GW” range. The rationale is simple: management views energy access as binary. You either have grid approval or you don’t, so new sites are only announced once approvals are locked in. Without grid approval, a site is essentially worthless, because you can’t build data centers on it.

They’ve emphasized that, in today’s environment of structural power shortages, any pipeline lacking grid approvals carries high uncertainty. That said, my leads suggest IREN has a pipeline of over 5 GW, on top of the 2.91 GW it already has secured.

It’s also important to distinguish between self-owned power connections and “contracted power”. Many cloud providers claim to have multiple gigawatts of connected power, and while that may be technically true, much of that capacity isn’t actually theirs — it’s leased from third-party powered-land and data center developers.

Many investors still struggle to differentiate between self-owned and leased power capacity — failing to appreciate the advantages of the former.

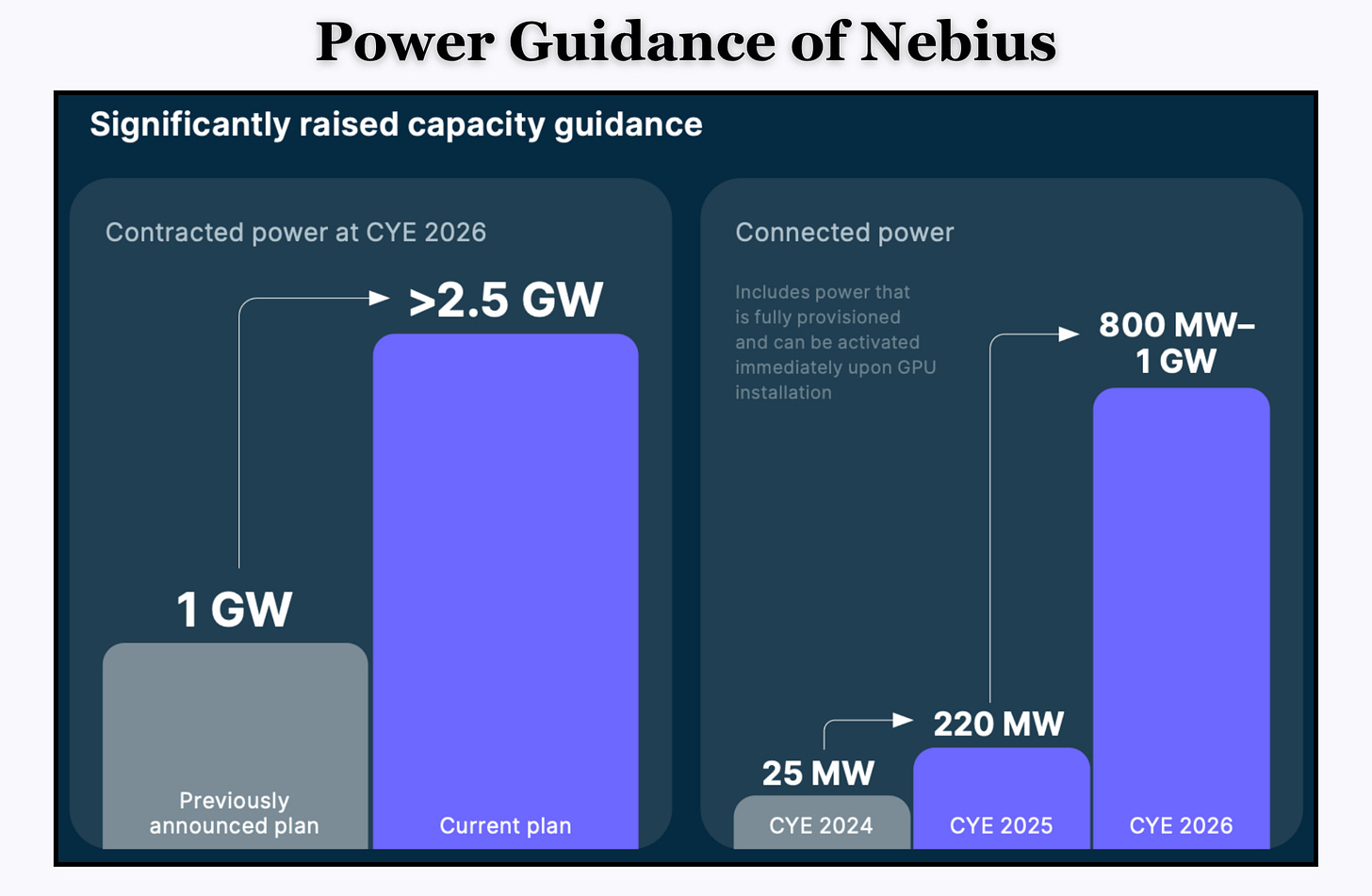

Take Nebius as an example. The company recently unveiled a major increase in its 2026 guidance for contracted power, aiming to secure more than 2.5 GW by next year.

Some investors may look at this and conclude that IREN’s power-related moat isn’t a meaningful advantage in today’s market.

That couldn’t be further from the truth.

Nebius is currently facing a severe shortage of power it has “on hand” — meaning available today. Don’t take it from me; take it directly from the company’s CEO, Arkady Volozh, who said the following on the most recent Q3 earnings call:

“Capacity today is the main bottleneck to revenue growth. And we are now working to remove this bottleneck.”

This bottleneck is so severe that Nebius’s recently announced $3 billion Meta deal was limited to the power the company currently has available:

“The size of the contract was limited to the amount of capacity that we had available, which means that if we had more, we could have sold more.” (CEO Arkady Volozh)

This case study shows the true value of IREN’s fully owned power pipeline. If it were easy to “just get power”, Nebius would have solved that bottleneck already and would not have been constrained when contracting large-scale deals.

Another key distinction is the margin advantage that comes from owning the power and data center infrastructure rather than leasing it. As noted earlier, IREN’s gross margins are among the highest in the neo-cloud cohort (greater than 90%), while operators such as Nebius and CoreWeave report gross margins in the low-70% range — primarily because they pay significant colocation fees to providers like DataOne or Core Scientific (CORZ).

Getting back to IREN’s pipeline, the company now has a consistent record of annual site additions and capacity expansions. The most recent addition was the 600 MW Sweetwater 2 site near Abilene, announced earlier this year. Prior to that, in 2024, the company secured 1.4 GW at its Sweetwater 1 site (also near Abilene) and expanded Childress from 600 MW to 750 MW through a 150 MW increase in approved grid capacity.

Image by Frans Bakker

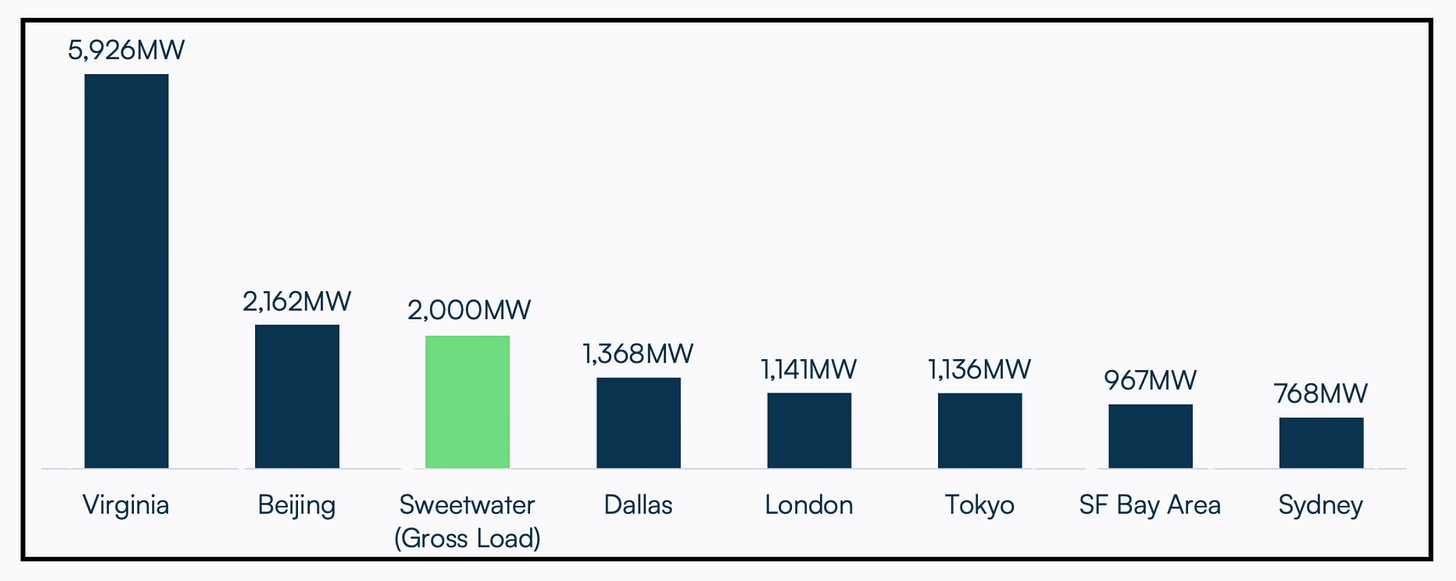

To contextualize just how large IREN’s powered land portfolio is, consider this: Chicago, one of the largest data center markets in North America, has an operational capacity of just ~1.2 GW, and that entire market is made up of hundreds of individual data center sites. The largest data center market in the world is Northern Virginia, specifically Ashburn (also known as “Data Center Alley”), with an operational capacity of around 5–6 GW. IREN’s 2.91 GW portfolio is already roughly 50–60% the size of that entire market, and its Sweetwater 1 & 2 campuses alone (2 GW combined) are equivalent to one-third to two-fifths of it.
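
Because the Ashburn figure is a range, the comparison shifts depending on which end you use. The sketch below simply divides the capacities quoted in this paragraph, plus the Sweetwater figures from the site history above. Note that it compares IREN’s secured power with operational market capacity, so it is an order-of-magnitude comparison rather than a like-for-like one.

```python
IREN_SECURED_GW = 2.91
SWEETWATER_GW = 2.0            # 1.4 GW (Sweetwater 1) + 0.6 GW (Sweetwater 2)
CHICAGO_GW = 1.2               # approximate operational capacity
ASHBURN_GW = (5.0, 6.0)        # low and high estimates of operational capacity


def share_of_market(capacity_gw: float, market_gw: tuple) -> tuple:
    """Return (share vs. the high estimate, share vs. the low estimate)."""
    low, high = market_gw
    return capacity_gw / high, capacity_gw / low


iren_vs_ashburn = share_of_market(IREN_SECURED_GW, ASHBURN_GW)
sweetwater_vs_ashburn = share_of_market(SWEETWATER_GW, ASHBURN_GW)
iren_vs_chicago = IREN_SECURED_GW / CHICAGO_GW

if __name__ == "__main__":
    print("IREN vs Ashburn:       {:.0%} to {:.0%}".format(*iren_vs_ashburn))
    print("Sweetwater vs Ashburn: {:.0%} to {:.0%}".format(*sweetwater_vs_ashburn))
    print(f"IREN vs Chicago:       {iren_vs_chicago:.1f}x")
```

That puts IREN’s secured portfolio at roughly half to 60% of Ashburn’s operational capacity and about 2.4x Chicago’s, with Sweetwater alone at one-third to two-fifths of Ashburn.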

So how did IREN manage to secure a record-breaking 2.91 GW?

Because the company moved early.

IREN’s management had the foresight to secure massive amounts of power long before the AI boom — years before ChatGPT launched and kicked off the gold rush for compute infrastructure. Co-CEO Dan Roberts has repeatedly spoken about his and his brother’s early vision of the world digitizing at an exponential pace — with the physical power infrastructure failing to keep up.

That vision led to a bold bet: real-world assets like grid-connected land and the data centers on top of it would become the rarest and most valuable resource in tech.

Most people are unaware of just how deep Dan Roberts’ background in power infrastructure runs and how critical that experience has been to IREN’s ability to secure utility-scale capacity. Before co-founding IREN, Dan was a founding executive and Investment Director at Palisade Investment Partners, an infrastructure investment firm managing over $6 billion across 20+ asset businesses, including wind farms, gas pipelines, and other energy infrastructure.

Long before the AI race began, IREN’s founders understood that access to power would become the ultimate bottleneck in a digitizing world. That foresight — paired with Dan’s deep infrastructure expertise — is a big reason why IREN was able to quietly amass 2.91 GW of permitted and grid-approved power.

The company now holds one of, if not the, largest powered land portfolios on the planet.

In short, IREN is much more than just a data center developer — it’s arguably one of the world’s best developers of powered land. That means securing large land parcels, negotiating directly with utilities and grid operators, building high-voltage substations, and navigating multi-year permitting and interconnection processes.

It’s a capital-intensive, highly specialized discipline — one that most modern data center developers no longer touch. IREN does it all in-house, and at massive scale.

To illustrate how unique IREN’s position is as a data center developer with gigawatts of self-owned, fully permitted capacity, let’s consider the following example: Most readers are probably familiar with the Stargate alliance between Oracle, OpenAI, and SoftBank. Their first 1.2 GW site in Abilene is being developed by Crusoe. But Crusoe doesn’t own the powered land — it leases the land from a company called Lancium, which specializes in acquiring and developing grid-connected land.

Lancium’s entire business model is focused on that first step: sourcing and entitling powered land. It monetizes this capability by leasing the land out to operators like Crusoe.

That’s a viable standalone model. But for IREN, this is only step one. Unlike Lancium, IREN prefers to own and build on top of its powered land, moving up the value chain and capturing far more value per megawatt. At the same time, it avoids having to lease or acquire powered land at rising market rates — a major advantage in the face of an intensifying power crunch.

This directly translates into higher margins, faster development timelines, and superior returns on invested capital.

Moreover, IREN has strategically secured power in regions with exceptionally low electricity costs, typically remote areas with abundant renewable generation such as wind and solar. At the company’s flagship 750 MW Childress site, all-in electricity costs have consistently ranged from 2.8 to 3.5 cents per kWh, remarkably low by industry standards. A key factor here is IREN’s optimized energy curtailment strategy: during brief price spikes (usually lasting just minutes or hours), the company shuts off its BTC mining operations to avoid the high-cost intervals.

For AI workloads, where uptime must remain near 100%, curtailment isn’t a viable option. Even without curtailment, however, energy costs are likely to average ~5 cents per kWh at IREN’s West Texas sites. That’s still extremely low compared to major data center hubs like Northern Virginia (6–7 cents) or Silicon Valley (often over 10 cents).
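
Cents per kWh can feel abstract, so here is a minimal sketch that converts them into an annual electricity bill for a constant IT load. It assumes the load runs at full power all year (no curtailment, no cooling overhead or PUE adjustment) and uses the per-kWh prices quoted above; the 100 MW load and the 6.5-cent Northern Virginia midpoint are illustrative choices, not company figures.

```python
HOURS_PER_YEAR = 8_760  # 24 * 365


def annual_energy_cost_usd(load_mw: float, cents_per_kwh: float,
                           utilization: float = 1.0) -> float:
    """Yearly electricity bill for a constant load (ignores PUE and demand charges)."""
    kwh = load_mw * 1_000 * HOURS_PER_YEAR * utilization
    return kwh * cents_per_kwh / 100


if __name__ == "__main__":
    LOAD_MW = 100  # hypothetical AI cloud deployment
    prices = {
        "West Texas (~5c)": 5.0,
        "Northern Virginia (6.5c midpoint)": 6.5,
        "Silicon Valley (10c)": 10.0,
    }
    for region, cents in prices.items():
        cost = annual_energy_cost_usd(LOAD_MW, cents)
        print(f"{region:<36} ${cost / 1e6:,.1f}M per year")
```

At 100 MW, each cent per kWh is worth about $8.8 million a year, so the gap between ~5 cents and a 6.5-cent Northern Virginia rate is roughly $13 million annually.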

This power cost advantage is especially meaningful for AI cloud, where the host, i.e., IREN, pays for the electricity. And because the energy is fully sourced from renewables like wind & solar, tenants can also claim that their data center capacity is sustainably powered — another very valuable (and often overlooked) selling point for IREN.

Now that you understand IREN’s unique power advantage, here’s an up-to-date overview of the company’s powered land portfolio & data center capacity:

Development Expertise

While IREN’s power advantage is increasingly well understood by investors, I’d argue that few truly grasp the significance of the second pillar of its moat: Development Expertise.

The term ‘development expertise’ is intentionally broad, as it captures several key capabilities that set IREN apart. For one, few, if any, data center companies are this vertically integrated. From developing and energizing greenfield land to building full-fledged turnkey data centers and running its own GPU cloud, IREN does it all in-house.

Analysts like Antonio Linares have labeled IREN the “Tesla of the data center industry” — and I couldn’t agree more.

Tesla set itself apart by doing nearly everything in-house: from design and battery development to manufacturing, assembly, and even the software layer. This vertical integration allows Tesla to capture more of the value chain than any traditional automaker. Thanks to a world-class engineering culture rooted in first-principles thinking, Tesla has driven efficiency at every level — resulting in lower costs per unit and vastly superior margins per vehicle sold. On top of that, Tesla isn’t afraid to branch out into entirely new verticals, such as Megapacks (utility-scale batteries), FSD (full self-driving), and robotics.

Likewise, IREN does nearly everything in-house and isn’t afraid to branch out into new verticals. They’re not just a BTC miner, or just a cloud provider, or just a colocation operator — they’re steadily evolving into a full-scale data center conglomerate, something that hasn’t really existed in this form before.

IREN is essentially a next-gen infrastructure company that’s breaking the mold, not unlike what Tesla did for autos and energy.

This level of vertical integration naturally compounds cost efficiency. Because IREN builds in-house — rather than relying on third-party developers who need their own margin — its cost structure is essentially materials plus labor. At scale, standardized designs, repeatable workflows, and procurement leverage drive further savings through economies of scale and learning-curve effects, which leads to lower unit costs with each successive build.

Another factor that sets IREN apart from the rest of the industry is its exceptional development speed. Building out its sites at a rapid cadence is the primary reason IREN emerged as the fastest-growing BTC miner of all time over the past 24 months. Secured power is certainly important, but to truly dominate, you need to be able to scale quickly.

IREN has built most of its air-cooled data centers at Childress at a pace of roughly 50 MW per month, 3–10x faster than the typical industry norm, where even experienced developers often average just 5–15 MW per month. Now that most of IREN’s new builds will be liquid-cooled to service next-generation GPU hardware, construction complexity has increased considerably and development timelines are longer. Nonetheless, IREN still ranks among the very best in this new class of facilities, with Horizons 1–4 (200 MW of critical IT) being developed and delivered to Microsoft over the course of 2026.
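
The gap in build pace compounds quickly. Using the air-cooled rates quoted above, this simple sketch estimates how long a hypothetical 300 MW build-out would take. It assumes a constant monthly pace and ignores permitting, equipment lead times, and energization, which in practice dominate many schedules.

```python
def months_to_build(capacity_mw: float, mw_per_month: float) -> float:
    """Months needed to deliver `capacity_mw` at a constant build pace."""
    if mw_per_month <= 0:
        raise ValueError("build pace must be positive")
    return capacity_mw / mw_per_month


IREN_PACE = 50.0                # MW per month (air-cooled, Childress)
INDUSTRY_PACE = (5.0, 15.0)     # typical range for experienced developers

# Speed-up vs. the fast and slow ends of the industry range
speedup_range = (IREN_PACE / INDUSTRY_PACE[1], IREN_PACE / INDUSTRY_PACE[0])

if __name__ == "__main__":
    target_mw = 300.0  # hypothetical build-out
    print(f"IREN pace:     {months_to_build(target_mw, IREN_PACE):.0f} months")
    for pace in INDUSTRY_PACE:
        print(f"{pace:>4.0f} MW/month: {months_to_build(target_mw, pace):.0f} months")
    print("Speed-up: {:.1f}x to {:.0f}x".format(*speedup_range))
```

That is 6 months versus 20 to 60 months, consistent with the 3–10x range cited above.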

It’s worth noting that construction of Horizon 1 (50 MW) began in early 2025, and since the Microsoft signing, the facility designs have been materially upgraded. Even assuming Horizon 1 is essentially complete by early 2026, delivering an additional 150 MW of top-tier liquid-cooled capacity within the same year would be a remarkable cadence.

In a compute-constrained market, development speed is crucial.

But IREN’s development edge isn’t just about construction speed or cost efficiency; the real differentiator is build quality, i.e., the performance of IREN’s data centers. In air cooling, IREN has achieved what no other data center provider has, pushing the upper boundaries of what was thought achievable by reaching a rack density of 80 kW at its Prince George facility in Canada.

Industry norms for air cooling sit well below 30 kW per rack, often in the 15–25 kW range. That’s largely because the traditional data center industry didn’t require such extreme cooling capacity before the AI boom, so engineering progress in this area stagnated over the past decade.

The only notable application that required concentrated power at scale was Bitcoin mining. As a result, a company like IREN, which already pushed the envelope in the mining industry, was uniquely positioned to repurpose its high-performance data centers for GPU clusters.

Industry experts like Brian Fry describe rack density as one of the most critical performance variables in a data center. Higher rack density means more powerful compute packed into tighter spaces — which not only improves efficiency but also directly enhances performance. Lack of adequate rack density is like having sand between gears. If you can’t cool densely packed servers, you’re forced to spread them out. That leads to larger, less compact facilities and creates internal latency problems — the greater the physical distance between compute nodes, the slower the communication between them (= worse performance).
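
The density-to-distance relationship can be sketched with simple geometry. Assuming, hypothetically, that each rack plus its share of aisle space occupies about 2.5 m² and that racks are laid out in a square hall, the snippet below compares a 10 MW cluster at 20 kW per rack with the same cluster at 80 kW per rack. Hall side length is only a crude proxy for cable runs, but it shows the scaling: quadrupling density cuts the rack count by 4x and linear distances by roughly 2x.

```python
import math


def racks_needed(it_load_kw: float, kw_per_rack: float) -> int:
    """Whole racks required to host a given IT load at a given density."""
    return math.ceil(it_load_kw / kw_per_rack)


def hall_side_m(racks: int, m2_per_rack: float = 2.5) -> float:
    """Side length of a square hall holding `racks`; crude proxy for cable-run length.

    m2_per_rack (rack plus its share of aisles) is a hypothetical assumption.
    """
    return math.sqrt(racks * m2_per_rack)


if __name__ == "__main__":
    CLUSTER_KW = 10_000  # hypothetical 10 MW GPU cluster
    for density in (20, 80):
        n = racks_needed(CLUSTER_KW, density)
        print(f"{density} kW/rack: {n} racks, hall side ~{hall_side_m(n):.1f} m")
```

Real halls are shaped by power and cooling distribution, not just rack count, so treat this as intuition rather than a design rule.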

IREN’s Co-CEO Dan Roberts has compared this to how semiconductors improve: as the chips get smaller, transistors are closer together, allowing signals to travel shorter distances and boosting performance. High rack density achieves a similar effect — but at the data center level, where GPU servers need to be tightly packed to maximize throughput.

I’ve heard from highly credible sources that multiple hyperscalers were stunned when IREN told them it was achieving 80 kW per rack in an air-cooled facility.

This edge in air cooling now presents a huge opportunity for IREN’s cloud segment and is one of the core reasons for its rapid cloud expansion. The previous generation of GPUs, the Hoppers, kicked off a gold rush of new “neo-clouds”, many of which undercut each other on price. Hoppers required ~40 kW per rack, but most of these clouds were renting conventional air-cooled facilities with 20–30 kW rack densities. While this led to subpar performance across the board, it was still “good enough” to flood the GPU rental market with Hoppers.

However, the newly launched Blackwell generation of GPUs requires far more power. The B300, for example, the most powerful air-cooled Blackwell variant, demands rack densities of 60–70 kW for optimal performance. That effectively locks most of the neo-cloud market out of this generation. Their existing infrastructure simply can’t handle the compute intensity of the air-cooled B300, not even at a compromised level of, say, 50 kW per rack, since very few air-cooled data centers on the market today can support even that density.

Even high-end providers like CoreWeave and Nebius, as well as the hyperscalers, are unlikely to compete in the air-cooled B200/B300 space. Instead, they’ll likely focus on the liquid-cooled variants. Liquid cooling enables much higher rack densities, but it also comes with significantly higher costs, in terms of both CAPEX and OPEX.

Building liquid-cooled data centers at scale (of which very little capacity exists today) requires enormous capital investment and advanced engineering capabilities. This remains a relatively new concept at the commercial level and only a select group of operators have the technical depth and financial scale to execute it properly. As a result, access to the liquid-cooled GPU variants will be limited to the upper tier of cloud providers.

The new Blackwell generation, in effect, acts as a strong barrier to entry for the entire sector: most providers can’t run the air-cooled B200/B300 models because their existing data centers can’t support the rack densities — and they can’t offer the liquid-cooled GB200/GB300 either, unless they’re willing to spend hundreds of millions building entirely new facilities.

This barrier will most likely constrain supply of the new Blackwell GPUs relative to the previous Hopper generation, leading to higher and more sustained GPU rental prices across the market. In other words, returns will likely be quite lucrative for the few cloud providers that can offer Blackwells.

So why does it make sense for IREN to go after the air-cooled Blackwell segment?

Because, unlike anyone else, IREN already has hundreds of megawatts of air-cooled capacity (once used for BTC mining) that can deliver 80 kW per rack TODAY. There’s no need for new R&D or retrofitting; the infrastructure is already there. For players like CoreWeave, it makes little sense to build high-end air-cooled data centers from scratch if the industry is likely to shift entirely to liquid cooling over the next three to five years anyway. Since IREN already has this air-cooled capacity built and available today, it can capitalize on this industry-wide dilemma and be one of the only cloud providers able to offer the far more cost-effective B200/B300 models at scale.

Why are these air-cooled GPU models more cost-effective?

Because air-cooled facilities use less energy, require fewer operational resources, and are generally much cheaper to maintain.

However, IREN is also pushing boundaries in the realm of liquid cooling. Horizons 1–4 are set to house 100 MW superclusters for high-performance AI training with flexible rack densities (130 kW to 200 kW). Few other developers are currently delivering multi-hundred-megawatt campuses capable of up to 200 kW per rack with a flexible architecture that can scale densities up or down to meet client requirements.

In contrast, CoreWeave’s liquid-cooled facilities target rack densities of around 130–140 kW, which is just enough to house the current GB300 Blackwells. However, future GPU generations, like NVIDIA’s upcoming Rubin line, will demand even more power per rack, meaning CoreWeave’s facilities will need significant upgrades to keep up. Unfortunately for CoreWeave, like most neo-clouds, it relies heavily on colocation partners to carry out those upgrades. It will need to knock on the doors of providers like Galaxy Digital or Applied Digital (APLD) to modify infrastructure it doesn’t control, and those providers often have little incentive to take on the enormous CAPEX burden without being compensated for it.

And upgrading rack density isn’t a minor tweak. It typically involves retrofitting major elements of the data center, including power delivery systems and cooling infrastructure. In some cases — particularly in older, more rigid facilities — those upgrades may not even be possible without having to tear down most of the existing infrastructure.

CRWV is already feeling the pain of relying on third-party contractors for much of its data center footprint. On the November 10 Q3 call, CEO Michael Intrator flagged delivery delays caused by a developer running behind schedule, which lowered Q4 expectations:

“In our case, we are affected by temporary delays related to a third-party data center developer who is behind schedule. This impacts fourth quarter expectations.”

After the recent Q3 earnings call, CRWV’s CEO went on CNBC and, when pressed by Jim Cramer, admitted that the delays the company was facing were across multiple sites, all tied to a single data center provider: Core Scientific (CORZ).

Notice how Intrator emphasizes that the hardest part of the cloud business is getting infrastructure online, a point he leans on to justify the missed delivery deadlines.

Interestingly, IREN’s CEO Dan Roberts addressed this exact issue on the company’s Q2 (FY Q4) call in late August — comments that, in my view, have aged like fine wine:

While many cloud providers are at the mercy of third-party developers, IREN controls its own destiny: all of its infrastructure is owned and operated in house, with no third-party landlords getting in the way of the development process.

When I visited the Childress site in late May, I asked IREN’s operations executives whether the company is future-proofing its data centers.

Their answer: yes, all of IREN’s infrastructure is highly modular and retrofittable.

For one, they assured me that 100% of the 650 MW (built-out) air-cooled data center capacity at Childress is fully retrofittable for liquid cooling. In fact, retrofitting that built-out capacity — rather than building from scratch — could reduce development time considerably. And even beyond that, all new infrastructure is designed to be both scalable and retrofittable for higher rack density requirements down the line.

IREN’s data center performance edge doesn’t stop at rack density either — it’s also pushing the envelope in internal latency optimization. At the beginning of this post, I explained how IREN strategically locates its data centers on top of major fiber backbones, enabling exceptionally low external latency between its facilities and major interconnectivity hubs like Dallas.

But internal latency — the speed at which data travels between servers and racks inside the data center — is just as important, and in some cases, even more so. In GPU deployments, optimized internal latency is critical because it directly impacts how efficiently data moves between compute nodes. Lower internal latency reduces communication delays, enables faster synchronization, improves throughput, and maximizes hardware utilization — all of which result in better overall system performance for AI workloads. This type of latency optimization depends on careful planning of rack layout, cabling length, and network topology.
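One part of the cabling-length point is plain physics: light in optical fiber travels at roughly two-thirds of its vacuum speed, so every meter of cable adds about 5 ns of one-way delay. The Python sketch below assumes a refractive index of 1.47 (a typical value for silica fiber) and compares two hypothetical layouts over a hypothetical three-link path. It covers propagation only; real interconnect latency also includes switch hops and serialization delay, which are ignored here.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458
FIBER_REFRACTIVE_INDEX = 1.47  # assumption: typical value for silica fiber


def one_way_propagation_ns(cable_m: float, n: float = FIBER_REFRACTIVE_INDEX) -> float:
    """Time for a signal to traverse `cable_m` meters of fiber, in nanoseconds."""
    return cable_m * n / SPEED_OF_LIGHT_M_PER_S * 1e9


def round_trip_ns(cable_m: float, links: int = 1) -> float:
    """Round-trip propagation delay across `links` sequential cable runs of equal length."""
    return 2 * links * one_way_propagation_ns(cable_m)


if __name__ == "__main__":
    # Hypothetical average cable runs for a compact vs. a sprawling layout.
    for label, meters in [("compact layout, 10 m runs", 10),
                          ("sprawling layout, 60 m runs", 60)]:
        print(f"{label}: ~{round_trip_ns(meters, links=3):.0f} ns round trip over 3 links")
```

Because propagation delay scales linearly with cable length, the sprawling layout’s propagation delay is six times the compact one’s. Each individual delay is small, but it adds up across the many synchronization steps of a large training job, which is why rack layout and cable runs are worth planning around.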

And from what I’ve heard, IREN is taking this extremely seriously. Sources tell me they are actively designing their upcoming liquid-cooled Horizon build-outs with internal latency as a core focus.

To illustrate IREN’s competitive edge, I created a visual representation of the two layers that make up its moat:

Given the company’s vast power advantage combined with its exceptional development expertise, I expect IREN to emerge as a clear market leader in the data center industry over the coming years.

Wrapping Up

In summary, IREN is far more than just a BTC miner or an AI cloud provider. It is a fully vertically integrated data center conglomerate — something the industry hasn’t seen before.

The company’s massive grid-connected power portfolio of nearly 3 GW, with potentially more than 5 GW in the pipeline, acts as a near-impenetrable competitive barrier. This is underpinned by best-in-class development capabilities that enable rapid build-outs with industry-leading performance.

Combined, these factors form an incredibly strong moat and pave the way for IREN to establish itself as THE data center company of the coming decade.

While I’m incredibly bullish on IREN, there are lingering concerns and risks across the broader AI ecosystem — a topic I’ll cover in depth in my upcoming deep dive: The AI Bubble (soon available to paid subscribers).

Thank you for reading. Cheers! ✌️

Disclaimer (NFA): This publication is for informational and educational purposes only — not investment, legal, tax, or accounting advice. Nothing herein constitutes a solicitation, recommendation, or offer to buy or sell any security or strategy. The author may hold — and may buy or sell without notice — securities, derivatives, or other instruments referenced. All opinions are the author’s, expressed in good faith as of publication, and subject to change without notice. Information is believed accurate but provided “as is,” without representations or warranties; errors or omissions may occur. Any forward-looking statements involve risks and uncertainties that may cause actual results to differ materially. Past performance is not indicative of future results. Do your own research (DYOR) and consult a qualified, licensed adviser who understands your circumstances before acting on this content. To the fullest extent permitted by law, the author and publication disclaim liability for any loss arising from reliance on this material.

Operating profitability: Some miners present unrealized and realized gains from Bitcoin price movements on their income statements as an “operating” item, which can materially inflate quarterly operating income and obscure true operating performance. In practice, these mark-to-market effects are closer to financing or treasury outcomes than to core operations. That said, under GAAP, public companies have latitude in presentation, and different expense and gain/loss categories may appear above or below the operating line depending on policy choices. For my claim that no other miner achieved operating profitability this cycle, I exclude all price movements of BTC holdings and assess operating results on a basis that strips out asset remeasurement effects.
